How to Create/Find A Dataset for Machine Learning?

The definition of a chatbot dataset is easy to comprehend, as it is just a combination of conversation and responses. The dataset serves as a dynamic knowledge base for the chatbot. These datasets are helpful in giving "as asked" answers to the user.

In current times, there is a huge demand for chatbots in every industry because they make work easier to handle. But the question is, where to find the chatbot training dataset? Is the selected dataset for chatbot training worthy or not? Let's find it out in the article.

Part 1. Why Do Chatbots Need Data?

The primary goal for any chatbot is to provide an answer to the user-requested prompt. But where does the answer come from? From chatbot dataset obviously! The data in the dataset can vary hugely in complexity.

They can be straightforward answers or proper dialogues used by humans while interacting. The data sources may include, customer service exchanges, social media interactions, or even dialogues or scripts from the movies.

When the data is provided to the Chatbots, they find it far easier to deal with the user prompts. When the data is available, NLP training can also be done so the chatbots are able to answer the user in human-like coherent language.

When the chatbot is given access to various resources of data, they understand the variability within the data. The cultural nuance, the slang, the variation within the language and multiple factors of human communication.

Not only large and diverse data set are helpful in getting to learn the variations within a language, but it also helps in avoiding any misinformation or biases in the information provided.

In addition to this, these large and diverse datasets provide assistance in giving a personalized answer to the user through user preference and user history data.

In a nutshell, chatbots are workable because the data is provided to them. If there is no diverse range of data made available to the chatbot, then you can also expect repeated responses that you have fed to the chatbot which may take a of time and effort.

Part 2. 6 Best Datasets for Chatbot Training

For a chatbot, it is important to have a massive amount of data to be trained on. There are multiple types of datasets on which the chatbot can be trained. Here are a few of them:

Question Answer Datasets for Chatbot

As the name says, these datasets are a combination of questions and answers. An example of one of the best question-and-answer datasets is WikiQA Corpus, which is explained below.

1.The WikiQA Corpus:

The WikiQA corpus is a dataset which is publicly available and it consists of sets of originally collected questions and phrases that had answers to the specific questions. There was only true information available to the general public who accessed the Wikipedia pages that had answers to the questions or queries asked by the user.

Customer Support Datasets for Chatbot

Customer support data is a set of data that has responses, as well as queries from real and bigger brands online. This data is used to make sure that the customer who is using the chatbot is satisfied with your answer.

2.Customer Support On Twitter:

This dataset is available on Kaggle. The dataset has more than 3 million tweets and responses from some of the priority brands on Twitter. This amount of data is really helpful in making Customer Support Chatbots through training on such data.

Dialogue Datasets for Chatbot

Dialogue-based Datasets are a combination of multiple dialogues of multiple variations. The dialogues are really helpful for the chatbot to understand the complexities of human nature dialogue.

3.Multi-Domain Wizard of OZ Dataset (MultiWOZ)

This is a huge Dialogue dataset where training can be performed. In this Dataset, you can find more than 10,000 dialogues. In the dialogues, a wide range of topics are covered. It is a set of complex and large data that has several variations throughout the text.

Multi-Lingual Datasets for Chatbot

As the name says, the datasets in which multiple languages are used and transactions are applied, are called multilingual datasets.

4.Nus Corpus:

The corpus was made for the translation and standardization of the text that was available on social media. It is built through a random selection of around 2000 messages from the Corpus of Nus and they are in English. After that, they are translated into formal Chinese.

Emotion and Sentiment Dataset for Chatbot

The datasets or dialogues that are filled with human emotions and sentiments are called Emotion and Sentiment Datasets.

5.Friends TV Show Emotion Dataset:

The TV show Friends is known to the world. It has a dataset available as well where there are a number of dialogues that shows several emotions. When training is performed on such datasets, the chatbots are able to recognize the sentiment of the user and then respond to them in the same manner.

Intent Classification Dataset for Chatbot

This kind of Dataset is really helpful in recognizing the intent of the user. It is filled with queries and the intents that are combined with it.

6.Snips NLU:

The above-mentioned dataset for chatbot training is a set of quarries and the intents associated with it. For example, booking a ride, or putting a date for an event. By continuous training on the available dataset, the chatbot recognizes the intent of the user and respond to them accurately with current guess on the nation.

Part 3. How To Create Your Own Dataset?

Way 1. Collect the Data that You Already Have in The Business

It is not at all easy to gather the data that is available to you and give it up for the training part. The data that is used for Chatbot training must be huge in complexity as well as in the amount of the data that is being used.

You must gather a huge corpus of data that must contain human-based customer support service data. The communication between the customer and staff, the solutions that are given by the customer support staff and the queries.

You can get this dataset from the already present communication between your customer care staff and the customer. It is always a bunch of communication going on, even with a single client, so if you have multiple clients, the better the results will be.

Once the dataset is available, it is cleaned and organized after which through machine learning and NLP techniques, the development of a chatbot is carried out which then becomes an efficient way of dealing with the issues that the customers are facing.

Way 2. Generate Training Data for Chatbots Using ChatGPT

What is it that ChatGPT cannot do? Nothing! ChatGPT itself being a chatbot is able of creating datasets that can be used in another business as training data.

Currently, multiple businesses are using ChatGPT for the production of large datasets on which they can train their chatbots. These chatbots are then able to answer multiple queries that are asked by the customer.

In order to use ChatGPT to create or generate a dataset, you must be aware of the prompts that you are entering. For example, if the case is about knowing about a return policy of an online shopping store, you can just type out a little information about your store and then put your answer to it.

In response to your prompt, ChatGPT will provide you with comprehensive, detailed and human uttered content that you will be requiring most for the chatbot development.

Way 3. Open Source Data

Open Source datasets are available for chatbot creators who do not have a dataset of their own. It can also be used by chatbot developers who are not able to create Datasets for training through ChatGPT.

There are multiple online and publicly available and free datasets that you can find by searching on Google. There are multiple kinds of datasets available online without any charge.

You can just download them and get your training started. As mentioned above, WikiQA is a set of question-and-answer data from real humans that was made public in 2015. It is still available to the public now but has evolved a lot. A huge amount of data has been changed and added to the Dataset.

Part 4. How Much Data Do You Need?

Before we discuss how much data is required to train a chatbot, it is important to mention the aspects of the data that are available to us. Ensure that the data that is being used in the chatbot training must be right. You can not just get some information from a platform and do nothing.

Clean the data if necessary, and make sure the quality is high as well. Although the dataset used in training for chatbots can vary in number, here is a rough guess. The rule-based and Chit Chat-based bots can be trained in a few thousand examples. But for models like GPT-3 or GPT-4, you might need billions or even trillions of training examples and hundreds of gigs or terabytes of data.

Part 5. Difference between Dataset & A Knowledge Base for Training Chatbots

The dataset can be explained as a collection of examples that are used to train the specific chatbot, The dataset is more sort of an input and output, where Algorthim learns what to reply on which prompt according to the output it has been trained on. The more divers the data is, the better the training of the chatbot.

On the other hand, Knowledge bases are a more structured form of data that is primarily used for reference purposes. It is full of facts and domain-level knowledge that can be used by chatbots for properly responding to the customer. It is more about giving true and factual responses to the user.

Part 6. Example Training for A Chatbot

To understand the training for a chatbot, let’s take the example of Zendesk, a chatbot that is helpful in communicating with the customers of businesses and assisting customer care staff.

Once you are able to identify what problem you are solving through the chatbot, you will be able to know all the use cases that are related to your business. In our case, the horizon is a bit broad and we know that we have to deal with "all the customer care services related data".

When you are able to get the data, identify the intent of the user that will be using the product. For each intent type, make a separate response. Make use of utterance here.

The next step would be to have a diverse team of individuals who are capable AI developers and are able to train what you provide, The bot training team can ask multiple questions to it and it can help in making more efficient with the queries that the users ask about business.

It is the point when you are done with it, make sure to add key entities to the variety of customer-related information you have shared with the Zendesk chatbot.

After that, select the personality or the tone of your AI chatbot, In our case, the tone will be extremely professional because they deal with customer care-related solutions.

In the end, do make sure that the training is carried on even after the chatbot is running. Go for continuous training.

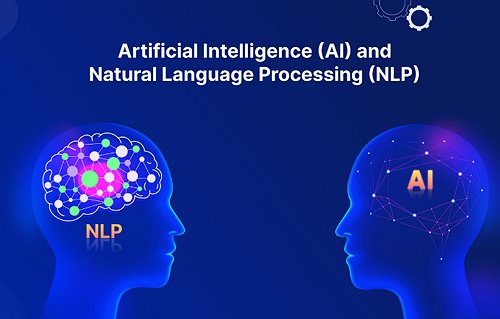

Part 7. Understanding of NLP and Machine Learning

AI is a vast field and there are multiple branches that come under it. Machine Learning is one of them. Machine learning is just like a tree and NLP (Natural Language Processing) is a branch that comes under it. NLP s helpful for computers to understand, generate and analyze human-like or human language content and mostly.

Written texts are provided to make things work. While machine learning has multiple topics under it. For example, prediction, supervised learning, unsupervised learning, classification and etc. Machine learning itself is a part of Artificial intelligence, It is more into creating multiple models that do not need human intervention.

Final Thoughts

AI and Machine Learning are becoming an inseparable part of this world’s digital journey, At this point in time, AI chatbot like ChatInsight has become increasingly popular and people are on their journey to learn the science behind everything that is related to AI and machine learning.

It includes studying data sets, training datasets, a combination of trained data with the chatbot and how to find such data. The above article was a comprehensive discussion of getting the data through sources and training them to create a full fledge running chatbot, that can be used for multiple purposes.

Leave a Reply.